Project Annotation Settings

Annotation Settings in Labelo allow you to control how tasks are labeled and managed within your project. Here’s a brief overview of the key settings:

Annotators

These are the users responsible for labeling or tagging tasks within the project.

Distribute Task Labeling

- Auto
  - Automatically assigns tasks to annotators.
  - No manual assignment needed; all project members (excluding reviewers) receive tasks.
- Manual
  - Requires the project manager, administrator, or owner to assign tasks individually.
  - Annotators can access tasks only after being manually assigned.

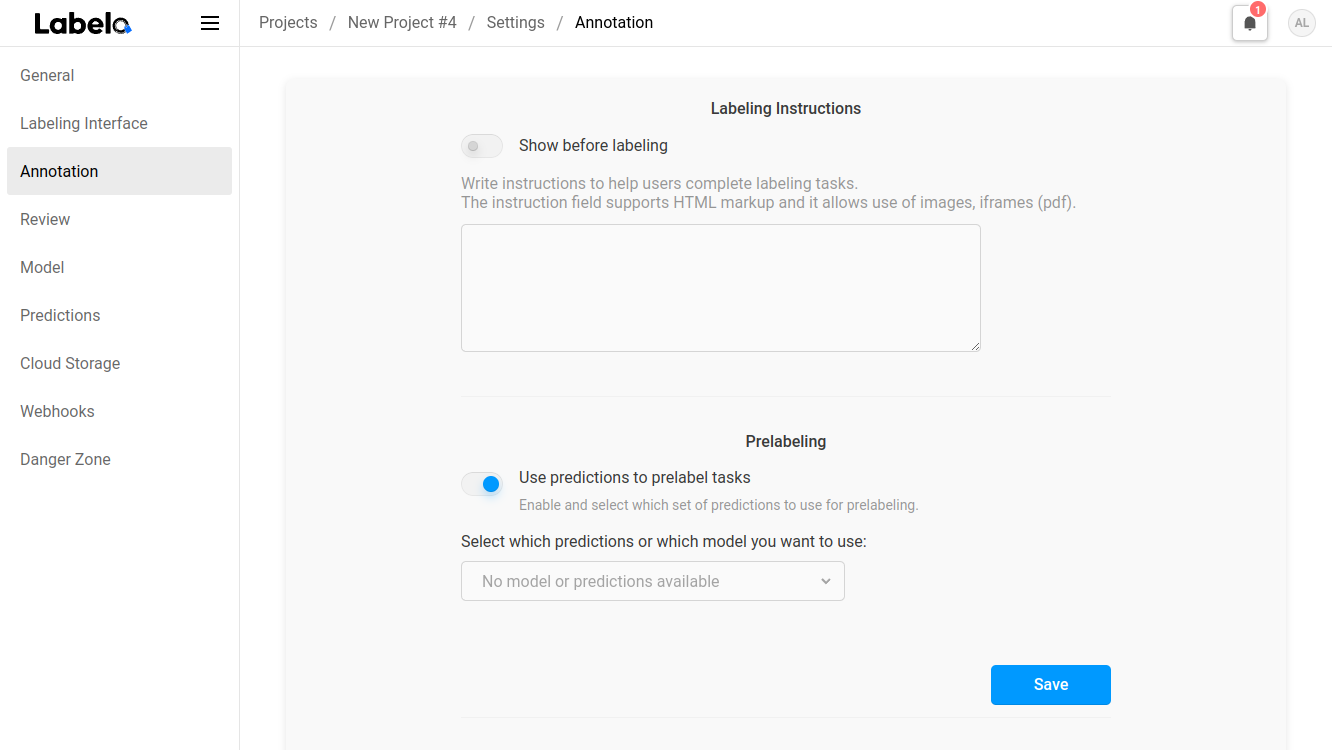
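The difference between the two distribution modes can be sketched in a few lines of Python. This is an illustrative model only, not Labelo's implementation: the `Member` class, the role names, the `visible_tasks` function, and the `assignments` mapping are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Member:
    name: str
    role: str  # hypothetical role names: "annotator", "reviewer", "manager"


def visible_tasks(member, task_ids, mode, assignments):
    """Return the task ids this member may label under the given mode.

    assignments maps a task id to the set of member names assigned to it.
    """
    if mode == "auto":
        # Auto: every project member except reviewers receives tasks.
        return [] if member.role == "reviewer" else list(task_ids)
    if mode == "manual":
        # Manual: only tasks explicitly assigned to this member are accessible.
        return [t for t in task_ids if member.name in assignments.get(t, set())]
    raise ValueError(f"unknown distribution mode: {mode!r}")


tasks = [1, 2, 3]
assignments = {2: {"ana"}}  # assigned by hand by a manager, administrator, or owner
ana = Member("ana", "annotator")
rita = Member("rita", "reviewer")

print(visible_tasks(ana, tasks, "auto", assignments))    # [1, 2, 3]
print(visible_tasks(rita, tasks, "auto", assignments))   # []
print(visible_tasks(ana, tasks, "manual", assignments))  # [2]
```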

Labeling Instructions

- Provide instructions for the annotators in this field, which supports HTML formatting.

- Enable the Show before labeling option to present a pop-up message to annotators when they start labeling. If this option is turned off, annotators will have to click the Show instructions button at the bottom of the labeling interface to access the instructions.

Skip Queue

The Skip Queue is designed to improve efficiency and manage workload by allowing annotators to skip tasks they find difficult or irrelevant.

This ensures that annotators focus on tasks they can handle effectively and helps maintain high-quality annotations.

1. Requeue Skipped Tasks Back to the Annotator

When an annotator skips a task, it moves to the end of their queue, and they will encounter it again as they progress through their assigned tasks.

- If the Annotator Leaves and Returns:
  - Auto Distribution: They may see the skipped task again if it hasn’t been completed by another annotator. If it remains unaddressed, they can update and resubmit it.
  - Manual Distribution: The skipped task will continue to appear in their queue until it is labeled.

Skipped tasks are marked as incomplete, so the overall progress shown on the project Dashboard cannot reach 100% while any task remains skipped.
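As a rough illustration of this mode, the sketch below models an annotator's queue with Python's `collections.deque`. The function names `skip_front` and `progress_percent` are hypothetical, and the progress formula (labeled tasks divided by total tasks) is an assumption about how the Dashboard figure could be computed.

```python
from collections import deque


def skip_front(queue):
    """Move the task at the front of the queue to the back."""
    queue.rotate(-1)


def progress_percent(total, labeled):
    """Share of tasks labeled so far; skipped tasks count as incomplete."""
    return 100.0 * labeled / total


queue = deque(["t1", "t2", "t3"])
labeled = []

skip_front(queue)                 # t1 is skipped; queue is now t2, t3, t1
labeled.append(queue.popleft())   # label t2
labeled.append(queue.popleft())   # label t3

print(list(queue))                        # ['t1']
print(progress_percent(3, len(labeled)))  # below 100 until t1 is labeled
```

The skipped task is the only one left at the end, which matches the behavior described above: the annotator meets it again after working through the rest of the queue.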

2. Requeue Skipped Tasks to Others

When an annotator skips a task, it is removed from their queue and reassigned to another annotator.

- Once a Task is Skipped:
  - If the annotator completes their current tasks, they cannot return to the skipped task. The reassignment process depends on the distribution method:
    - Auto Distribution: The task is automatically assigned to another annotator.
    - Manual Distribution: A project manager must manually reassign the task.

If no other annotators are available, or if all annotators skip the task, it will remain incomplete, which will affect the overall project progress on the Dashboard.
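The reassignment logic might look like the following sketch. It is a simplified model under stated assumptions: the `requeue_to_others` function, the `queues` and `skipped_by` structures, and the rule of picking the first annotator who has not yet skipped the task are all hypothetical, since the actual selection rule is not described here.

```python
def requeue_to_others(task, skipper, queues, skipped_by, mode):
    """Remove a skipped task from the skipper's queue and try to reassign it.

    Returns the name of the new annotator, or None if the task stays unassigned.
    """
    queues[skipper].remove(task)
    skipped_by.setdefault(task, set()).add(skipper)

    if mode == "manual":
        # Manual distribution: a project manager has to reassign the task.
        return None

    # Auto distribution. Assumption: pick the first annotator who has not
    # skipped this task; the real selection rule is not documented here.
    for name, queue in queues.items():
        if name not in skipped_by[task]:
            queue.append(task)
            return name
    return None  # everyone skipped it, so the task remains incomplete


queues = {"ana": ["t1", "t2"], "ben": ["t3"]}
skipped_by = {}

first = requeue_to_others("t1", "ana", queues, skipped_by, "auto")
second = requeue_to_others("t1", "ben", queues, skipped_by, "auto")

print(first)   # ben
print(second)  # None
print(queues)  # {'ana': ['t2'], 'ben': ['t3']}
```

After both annotators skip `t1`, it sits in no queue, which corresponds to the incomplete state that holds back project progress.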

3. Ignore Skipped Tasks

- Auto Distribution: When a task is skipped, it is marked as completed and removed from the original annotator’s queue. If further annotations are needed, it will be assigned to other annotators until all requirements are met.

- Manual Distribution: Skipped tasks are taken out of the original annotator's queue but remain in the queues of other annotators.

Annotating Options

- Show Skip Button:
  - Control the visibility of the Skip button for annotators.
- Allow Empty Annotations:
  - This setting determines if annotators can submit tasks without making any annotations. When enabled, tasks can be submitted even if no labels or regions are added, resulting in empty annotations.
- Show Data Manager to Annotators:
  - If this option is disabled, annotators will only have access to the label stream. When enabled, they can use the Data Manager to select tasks from a list. Note that certain information will remain hidden from annotators; they will only see a limited subset of Data Manager columns and will not have access to details like Annotators, Agreement, or Reviewers.
- Annotators Must Leave a Comment on Skip:
  - When this setting is activated, annotators are required to provide a comment each time they skip a task.

Pre Labeling

If you are using an ML backend or model, or leveraging Prompts for predictions, you can adjust the settings to automatically pre-label tasks based on these predictions. Configure this feature by selecting the prediction source from the drop-down menu.

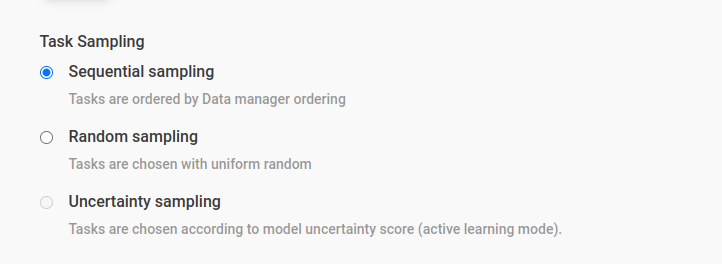

Task Sampling

Configure the sequence in which tasks are shown to annotators.

| Sampling Method | Description |
|---|---|
| Uncertainty Sampling | When using a machine learning backend with active learning, this option helps improve your model. Labelo picks tasks where the model is least confident or most uncertain. This reduces the amount of data that needs labeling while enhancing model performance. |
| Sequential Sampling | Tasks are presented to annotators in the order they appear in the Data Manager. |
| Random Sampling | Tasks are displayed in a uniform random order. |
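To make the three orderings concrete, here is a minimal Python sketch. It is not Labelo's code: the `order_tasks` function, the method names, and the `score` field (standing in for model confidence, where lower means less certain) are assumptions made for illustration.

```python
import random


def order_tasks(tasks, method, seed=None):
    """Return tasks in the order an annotator would see them."""
    if method == "sequential":
        # Keep the order in which the tasks are listed.
        return list(tasks)
    if method == "random":
        # Uniform random permutation; seed is only for reproducible demos.
        shuffled = list(tasks)
        random.Random(seed).shuffle(shuffled)
        return shuffled
    if method == "uncertainty":
        # Least confident predictions first (hypothetical "score" field).
        return sorted(tasks, key=lambda t: t["score"])
    raise ValueError(f"unknown sampling method: {method!r}")


tasks = [
    {"id": 1, "score": 0.9},
    {"id": 2, "score": 0.2},
    {"id": 3, "score": 0.6},
]

print([t["id"] for t in order_tasks(tasks, "sequential")])   # [1, 2, 3]
print([t["id"] for t in order_tasks(tasks, "uncertainty")])  # [2, 3, 1]
print([t["id"] for t in order_tasks(tasks, "random", seed=0)])
```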

Well-chosen annotation settings in Labelo improve both your project’s efficiency and your model’s performance. Selecting the task distribution and sampling methods that fit your team streamlines the workflow and improves the quality of your annotations.