Annotation Reviewing

In machine learning and data science, model performance depends heavily on the quality of labeled data. Labelo is a robust tool built for data labeling and annotation. This page explains how to review labeled data in Labelo so that your dataset is accurate, consistent, and ready for model training.

TIP

Reviewing improves the accuracy, consistency, and quality of your dataset.

Review Workflow

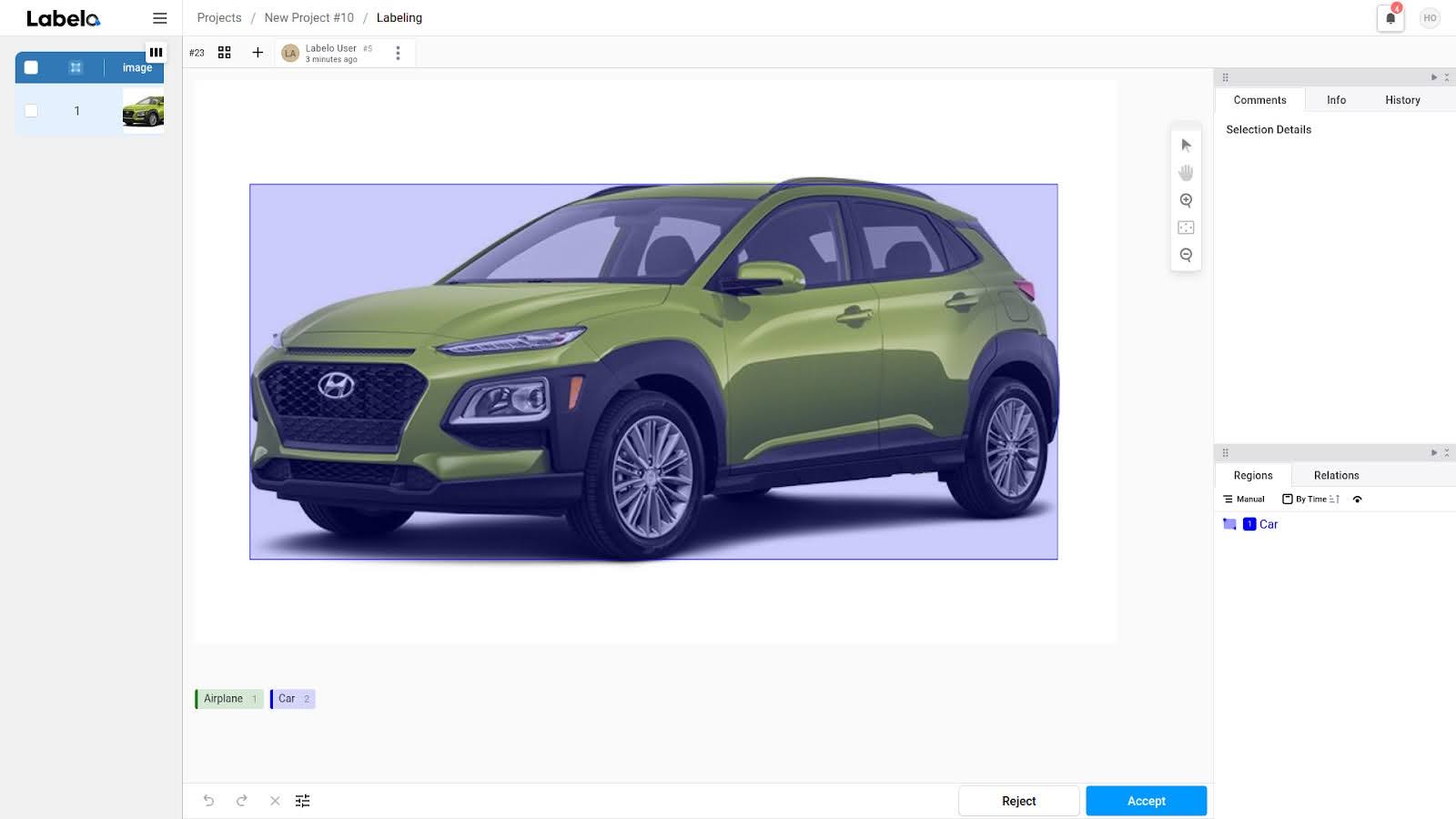

1. Once a task is labeled, navigate to it in the project where you want to perform the review.

After a task has been labeled in Labelo, review its annotations for accuracy and consistency before finalizing the dataset. This review is essential for maintaining data quality, especially in machine learning projects, where the precision of labeled data directly affects model performance.

2. Inspect the Labeled Annotations

After opening the labeled task, carefully review the annotations applied to the data. Check whether the correct labels have been assigned, whether the shapes (such as bounding boxes or polygons) are accurate, and whether any attributes associated with the labels (e.g., confidence scores or classification categories) are correct.

3. Make Corrections if Needed

If you identify any mistakes or inconsistencies during the review, Labelo allows you to make immediate corrections to the labeled data. You can adjust the labels, shapes, or other annotations directly in the task interface.

4. Approve or Reject the Labeled Task

Once you are satisfied with the quality of the annotation, you can approve the labeled task, marking it as ready for inclusion in the final dataset. If the task still needs adjustments, you can reject it and provide feedback to the annotator for further revisions. Repeating this cycle until the task is approved helps the final dataset meet your standards of accuracy and quality.
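The checks from steps 2 to 4 can also be pictured as a small script. The following is a minimal sketch only: it does not use Labelo's API or export format, and the `Box` class, the `find_issues` and `review` functions, and the field names are hypothetical, assumed purely for illustration. It shows the kind of checks a reviewer applies (known label, non-empty shape, shape inside the image) and how the result maps to an approve or reject decision.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical bounding-box annotation (not Labelo's data model)."""
    label: str
    x: float
    y: float
    width: float
    height: float


def find_issues(boxes, allowed_labels, image_width, image_height):
    """Return a list of human-readable problems found in one task's boxes."""
    issues = []
    for i, box in enumerate(boxes):
        # Step 2: was a label from the project's label set assigned?
        if box.label not in allowed_labels:
            issues.append(f"box {i}: unknown label '{box.label}'")
        # Step 2: is the shape plausible?
        if box.width <= 0 or box.height <= 0:
            issues.append(f"box {i}: empty shape")
        elif (box.x < 0 or box.y < 0
              or box.x + box.width > image_width
              or box.y + box.height > image_height):
            issues.append(f"box {i}: shape extends outside the image")
    return issues


def review(boxes, allowed_labels, image_width, image_height):
    """Step 4: approve when no issues are found, otherwise reject with feedback."""
    issues = find_issues(boxes, allowed_labels, image_width, image_height)
    decision = "rejected" if issues else "approved"
    return decision, issues


if __name__ == "__main__":
    labels = {"car", "person"}
    task = [Box("cat", 10, 10, 50, 40), Box("car", 90, 90, 20, 20)]
    decision, feedback = review(task, labels, image_width=100, image_height=100)
    print(decision)
    for line in feedback:
        print(" -", line)
```

A script like this can only catch mechanical problems. Whether a box actually fits the object, or whether the label is the right one for the content, still requires a human reviewer working in the task interface.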

NOTE

All organization members in the project, except annotators, have access to review the data.